Jojy Cheriyan, MD, PhD, MPH, MPhil

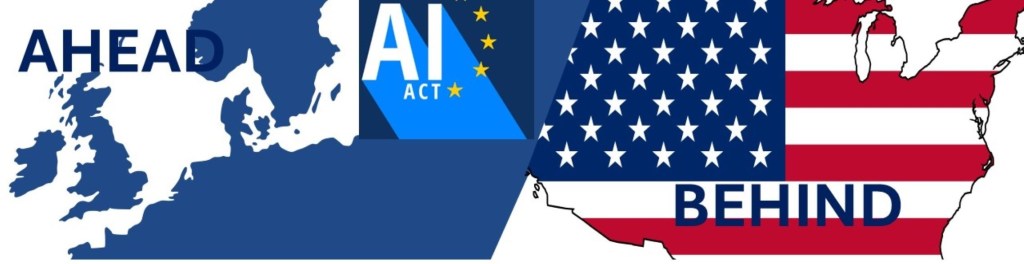

The European Union (EU) is leading the way in Artificial Intelligence (AI) regulation with the AI Act, which entered into force on August 1, 2024. It is the first comprehensive legal framework specifically designed to regulate AI technologies. In contrast, the United States (U.S.) has yet to enact a comprehensive national AI law. AI regulation in the U.S. remains fragmented, with sector-specific oversight handled by various federal agencies (e.g., FTC, FDA).

The EU’s approach is proactive and precautionary, built on a risk-based framework that categorizes AI systems by their potential for harm (unacceptable risk, high risk, limited risk, and minimal or no risk). The Act imposes strict rules on high-risk AI applications, such as those used in healthcare, law enforcement, and biometric identification, and outright bans systems that pose unacceptable risks, such as social scoring and, with narrow exceptions, real-time remote biometric identification in public spaces by law enforcement. The U.S. has taken a lighter regulatory approach, focusing more on innovation, research, and voluntary guidelines than on binding laws. While federal initiatives such as the National AI Initiative Act and the Blueprint for an AI Bill of Rights promote ethical AI practices, the lack of a unified legal framework leaves AI governance in a state of limbo.

The United States can learn several key lessons from the EU’s AI Act about how to regulate AI responsibly while still encouraging innovation. The key takeaways for the U.S. are:

1. Risk-Based Framework

The EU’s AI Act uses a risk-based approach, classifying AI systems into categories like “unacceptable risk,” “high risk,” “limited risk,” or “minimal/no-risk.” This ensures that stricter regulations are imposed on systems that can have significant societal impacts (e.g., biometric surveillance, healthcare, hiring). The U.S. could adopt a similar approach, ensuring that high-risk AI applications receive more regulatory oversight while leaving room for innovation in low-risk areas.

2. Transparency Requirements

The Act mandates transparency for AI systems, especially those that interact directly with people: users must be clearly told that they are dealing with an AI. Transparency is critical for trust, and the U.S. could adopt similar provisions, particularly in consumer-facing AI applications or high-stakes sectors like finance, healthcare, and law enforcement.

3. Human Oversight

The EU’s AI Act stresses the importance of human oversight in AI systems, particularly in high-risk areas. Human-in-the-loop systems ensure that decisions made by AI, especially in sensitive areas like law enforcement or healthcare, are monitored and validated by human professionals. The U.S. could learn from this to ensure that AI does not replace human judgment in critical fields, such as medical decision-making or judicial processes.

4. Accountability and Governance

The EU requires providers and deployers (users) of high-risk AI systems to implement strong accountability mechanisms, including risk management systems, documentation, and compliance checks. The U.S. could strengthen its governance structures for AI by setting up similar accountability measures, such as mandatory audits and reporting for AI applications in sensitive industries.

5. Data Protection and Privacy

The EU AI Act dovetails with the General Data Protection Regulation (GDPR), which has applied across the EU since 2018, to ensure that strong data protection rights are respected wherever AI processes personal data. The U.S., which lacks a comprehensive federal privacy law, could take lessons from this integration to establish more robust data privacy protections for its citizens in AI applications, particularly given growing concerns about surveillance and bias.

6. Ethical and Societal Impact Considerations

The EU AI Act addresses ethical concerns by banning systems that violate fundamental rights, such as social scoring systems or real-time biometric surveillance in public spaces. The U.S. could enhance its AI regulatory framework by addressing similar ethical concerns and societal impacts, including the potential for algorithmic bias and discrimination.

7. Innovation Sandbox for Startups

The EU AI Act includes provisions for regulatory sandboxes that allow for experimentation in a controlled environment. This encourages innovation while ensuring compliance with regulations. The U.S. could adopt a similar model to foster innovation in AI while providing startups the flexibility to experiment responsibly.

8. AI for Public Good

The EU Act encourages the development and use of AI for public good, including healthcare, sustainability, and education. The U.S. could prioritize policies that incentivize the development of AI that solves pressing societal challenges, particularly in sectors like healthcare (e.g., AI in medicine), climate change, and social inequality.

9. International Collaboration

AI is a global issue, and the EU Act promotes international collaboration on AI standards. The U.S. could benefit from collaborating with other nations to create harmonized AI regulations that promote global innovation while addressing cross-border challenges such as data sharing, cybersecurity, and AI ethics.

Conclusion

Adopting some of these elements could enable the U.S. to build a balanced, forward-thinking regulatory framework that promotes both innovation and responsibility in AI development. In summary, the EU is ahead with an established legal framework for AI, while the U.S. is taking a slower, more decentralized approach that favors innovation and ethical guidance over enforceable regulation. U.S. policymakers are weighing the concern that overregulation could stifle innovation against the need to address the societal risks posed by AI. For now, efforts toward a comprehensive federal AI law remain at an early stage. The delay increases the risk of legal uncertainty, regulatory conflicts, privacy violations, discrimination claims, and liability disputes, all of which could harm both businesses and consumers. A clear regulatory framework could mitigate these risks by establishing accountability, safety standards, and ethical guidelines for AI deployment.

The content provided on this blog, “Medical and Healthcare Insights,” is for informational purposes only and is not intended as a substitute for professional medical advice, diagnosis, or treatment. The views and opinions expressed in the blog posts are those of the author and do not necessarily reflect the official policy or position of any healthcare institution, organization, or employer. Readers are encouraged to consult with qualified healthcare professionals for any health-related questions or concerns. The author and the blog are not responsible for any errors or omissions, or for any outcomes related to the use of this information. Use of this blog and its content is at your own risk.
